“Scientists have known for centuries that a single study will not resolve a major issue. Indeed, a small sample study will not even resolve a minor issue. Thus, the foundation of science is the cumulation of knowledge from the results of many studies.” (Hunter et al. 1982, p. 10)

Meta-analysis is a central method for knowledge accumulation in many scientific fields (Aguinis et al. 2011c; Kepes et al. 2013). Similar to a narrative review, it serves as a synopsis of a research question or field. However, going beyond a narrative summary of key findings, a meta-analysis adds value in providing a quantitative assessment of the relationship between two target variables or the effectiveness of an intervention (Gurevitch et al. 2018). Also, it can be used to test competing theoretical assumptions against each other or to identify important moderators where the results of different primary studies differ from each other (Aguinis et al. 2011b; Bergh et al. 2016). Rooted in the synthesis of the effectiveness of medical and psychological interventions in the 1970s (Glass 2015; Gurevitch et al. 2018), meta-analysis is nowadays also an established method in management research and related fields.

The increasing importance of meta-analysis in management research has resulted in the publication of guidelines in recent years that discuss the merits and best practices in various fields, such as general management (Bergh et al. 2016; Combs et al. 2019; Gonzalez-Mulé and Aguinis 2018), international business (Steel et al. 2021), economics and finance (Geyer-Klingeberg et al. 2020; Havranek et al. 2020), marketing (Eisend 2017; Grewal et al. 2018), and organizational studies (DeSimone et al. 2020; Rudolph et al. 2020). These articles discuss existing and trending methods and propose solutions for frequently encountered problems. This editorial briefly summarizes the insights of these papers; provides a workflow of the essential steps in conducting a meta-analysis; suggests state-of-the-art methodological procedures; and points to other articles for in-depth investigation. Thus, this article has two goals: (1) based on the findings of previous editorials and methodological articles, it defines methodological recommendations for meta-analyses submitted to Management Review Quarterly (MRQ); and (2) it serves as a practical guide for researchers who have little experience with meta-analysis as a method but plan to conduct one in the future.

The first step in conducting a meta-analysis, as with any other empirical study, is the definition of the research question. Most importantly, the research question determines the realm of constructs to be considered or the type of interventions whose effects shall be analyzed. When defining the research question, two hurdles might develop. First, when defining an adequate study scope, researchers must consider that the number of publications has grown exponentially in many fields of research in recent decades (Fortunato et al. 2018). On the one hand, a larger number of studies increases the potentially relevant literature basis and enables researchers to conduct meta-analyses. On the other hand, scanning a large number of studies that could be potentially relevant for the meta-analysis can result in an unmanageable workload. Thus, Steel et al. (2021) highlight the importance of balancing manageability and relevance when defining the research question. Second, like the number of primary studies, the number of meta-analyses in management research has also grown strongly in recent years (Geyer-Klingeberg et al. 2020; Rauch 2020; Schwab 2015). Therefore, it is likely that one or several meta-analyses for many topics of high scholarly interest already exist. However, this should not deter researchers from investigating their research questions. One possibility is to consider moderators or mediators of a relationship that have previously been ignored. For example, a meta-analysis about startup performance could investigate the impact of different ways to measure the performance construct (e.g., growth vs. profitability vs. survival time) or certain characteristics of the founders as moderators. Another possibility is to replicate previous meta-analyses and test whether their findings can be confirmed with an updated sample of primary studies or newly developed methods.
Frequent replications and updates of meta-analyses are important contributions to cumulative science and are increasingly called for by the research community (Anderson & Kichkha 2017; Steel et al. 2021). Consistent with its focus on replication studies (Block and Kuckertz 2018), MRQ therefore also invites authors to submit replication meta-analyses.

Similar to conducting a literature review, the search process of a meta-analysis should be systematic, reproducible, and transparent, resulting in a sample that includes all relevant studies (Fisch and Block 2018; Gusenbauer and Haddaway 2020). There are several identification strategies for relevant primary studies when compiling meta-analytical datasets (Harari et al. 2020). First, previous meta-analyses on the same or a related topic may provide lists of included studies that offer a good starting point to identify and become familiar with the relevant literature. This practice is also applicable to topic-related literature reviews, which often summarize the central findings of the reviewed articles in systematic tables. Both article types likely include the most prominent studies of a research field. The most common and important search strategy, however, is a keyword search in electronic databases (Harari et al. 2020). This strategy will probably yield the largest number of relevant studies, particularly so-called ‘grey literature’, which may not be considered by literature reviews. Gusenbauer and Haddaway (2020) provide a detailed overview of 34 scientific databases, of which 18 are multidisciplinary or have a focus on management sciences, along with their suitability for literature synthesis. To prevent biased results due to the scope or journal coverage of one database, researchers should use at least two different databases (DeSimone et al. 2020; Martín-Martín et al. 2021; Mongeon & Paul-Hus 2016). However, a database search can easily lead to an overload of potentially relevant studies. For example, key term searches in Google Scholar for “entrepreneurial intention” and “firm diversification” resulted in more than 660,000 and 810,000 hits, respectively (see Footnote 1). Therefore, a precise research question and precise search terms using Boolean operators are advisable (Gusenbauer and Haddaway 2020).
Addressing the challenge of identifying relevant articles in the growing number of database publications, (semi)automated approaches using text mining and machine learning (Bosco et al. 2017; O’Mara-Eves et al. 2015; Ouzzani et al. 2016; Thomas et al. 2017) can also be promising and time-saving search tools in the future. Also, some electronic databases offer the possibility to track forward citations of influential studies and thereby identify further relevant articles. Finally, collecting unpublished or undetected studies through conferences, personal contact with (leading) scholars, or listservs can be strategies to increase the study sample size (Grewal et al. 2018; Harari et al. 2020; Pigott and Polanin 2020).
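
To illustrate how precise search terms with Boolean operators can be assembled, the following minimal sketch joins synonyms of one concept with OR and combines the concepts with AND. The function name and the search terms are hypothetical, and the exact query syntax differs between databases, so the resulting string would need to be adapted to each database's search interface.

```python
def build_boolean_query(concept_groups):
    """Join synonyms with OR inside a concept and join the concepts with AND."""
    clauses = []
    for synonyms in concept_groups:
        quoted = [f'"{term}"' for term in synonyms]  # quote terms to search exact phrases
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


# Hypothetical search terms, for illustration only.
query = build_boolean_query([
    ["entrepreneurial intention", "startup intention"],
    ["self-efficacy", "perceived behavioral control"],
])
print(query)
```

Storing the concept groups rather than the finished string makes it easier to document the search in a reproducible way and to rerun it in a second database.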

Next, researchers must decide which studies to include in the meta-analysis. Some guidelines for literature reviews recommend limiting the sample to studies published in renowned academic journals to ensure the quality of findings (e.g., Kraus et al. 2020). For meta-analysis, however, Steel et al. (2021) advocate for the inclusion of all available studies, including grey literature, to prevent selection biases based on availability, cost, familiarity, and language (Rothstein et al. 2005), or the “Matthew effect”, which denotes the phenomenon that highly cited articles are found faster than less cited articles (Merton 1968). Harrison et al. (2017) find that the effects of published studies in management are inflated on average by 30% compared to unpublished studies. This so-called publication bias or “file drawer problem” (Rosenthal 1979) results from the preference of academia to publish statistically significant results more readily than statistically insignificant ones. Owen and Li (2020) showed that publication bias is particularly severe when variables of interest are used as key variables rather than control variables. To estimate the true effect size of a target variable or relationship, the inclusion of all types of research outputs is therefore recommended (Polanin et al. 2016). Different test procedures to identify publication bias are discussed subsequently in Step 7.

In addition to the decision of whether to include certain study types (i.e., published vs. unpublished studies), there can be other reasons to exclude studies that are identified in the search process. These reasons can be manifold and are primarily related to the specific research question and methodological peculiarities. For example, studies identified by keyword search might not qualify thematically after all, may use unsuitable variable measurements, or may not report usable effect sizes. Furthermore, there might be multiple studies by the same authors using similar datasets. If they do not differ sufficiently in terms of their sample characteristics or variables used, only one of these studies should be included to prevent bias from duplicates (Wood 2008; see this article for a detection heuristic).

In general, the screening process should be conducted stepwise, beginning with a removal of duplicate citations from different databases, followed by abstract screening to exclude clearly unsuitable studies and a final full-text screening of the remaining articles (Pigott and Polanin 2020). A graphical tool to systematically document the sample selection process is the PRISMA flow diagram (Moher et al. 2009). Page et al. (2021) recently presented an updated version of the PRISMA statement, including an extended item checklist and flow diagram to report the study process and findings.
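
The first screening step, the removal of duplicate citations from different databases, can be sketched as follows. This is a minimal illustration under simple assumptions: records are dictionaries with a `title` and an optional `doi` field (hypothetical field names), and two records count as duplicates if they share a DOI or a normalized title. Real exports usually need more careful matching.

```python
import re


def normalize_title(title):
    """Lowercase a title and collapse everything except letters and digits."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def deduplicate(records):
    """Keep the first record among those sharing a DOI or a normalized title."""
    seen, unique = set(), []
    for rec in records:
        keys = {("title", normalize_title(rec["title"]))}
        doi = (rec.get("doi") or "").strip().lower()
        if doi:
            keys.add(("doi", doi))
        is_duplicate = bool(keys & seen)
        seen |= keys  # remember every identifier, including those of duplicates
        if not is_duplicate:
            unique.append(rec)
    return unique


# Hypothetical records from two database exports.
records = [
    {"title": "TMT Diversity and Firm Performance", "doi": "10.1000/abc", "source": "Database A"},
    {"title": "TMT diversity and firm performance.", "doi": None, "source": "Database B"},
    {"title": "Founder Traits and Survival", "doi": "10.1000/xyz", "source": "Database B"},
]
unique = deduplicate(records)
print(f"identified: {len(records)}, duplicates removed: {len(records) - len(unique)}")
```

The two counts printed at the end are the kind of figures that a PRISMA flow diagram reports for the identification stage.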

The two most common meta-analytical effect size measures in management studies are (z-transformed) correlation coefficients and standardized mean differences (Aguinis et al. 2011a; Geyskens et al. 2009). However, meta-analyses in management science and related fields may not be limited to those two effect size measures but rather depend on the subfield of investigation (Borenstein 2009; Stanley and Doucouliagos 2012). In economics and finance, researchers are more interested in the examination of elasticities and marginal effects extracted from regression models than in pure bivariate correlations (Stanley and Doucouliagos 2012). Regression coefficients can also be converted to partial correlation coefficients based on their t-statistics to make regression results comparable across studies (Stanley and Doucouliagos 2012). Although some meta-analyses in management research have combined bivariate and partial correlations in their study samples, Aloe (2015) and Combs et al. (2019) advise researchers not to use this practice. Most importantly, they argue that the effect size strength of partial correlations depends on the other variables included in the regression model and is therefore incomparable to bivariate correlations (Schmidt and Hunter 2015), resulting in a possible bias of the meta-analytic results (Roth et al. 2018). We endorse this opinion. If at all, we recommend separate analyses for each measure. In addition to these measures, survival rates, risk ratios or odds ratios, which are common measures in medical research (Borenstein 2009), can be suitable effect sizes for specific management research questions, such as understanding the determinants of the survival of startup companies. To summarize, the choice of a suitable effect size is often taken away from the researcher because it is typically dependent on the investigated research question as well as the conventions of the specific research field (Cheung and Vijayakumar 2016).
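
As a brief sketch of the two transformations mentioned above, the code below converts a regression t-statistic into a partial correlation and applies Fisher's z-transformation to a bivariate correlation. It assumes that `df` is the residual degrees of freedom of the regression and uses the common approximation 1 / (n - 3) for the sampling variance of z; the function names and input values are illustrative.

```python
import math


def partial_correlation_from_t(t_stat, df):
    """Partial correlation r_p = t / sqrt(t^2 + df), df = residual degrees of freedom."""
    return t_stat / math.sqrt(t_stat ** 2 + df)


def fisher_z(r, n):
    """Return Fisher's z for a bivariate correlation and its variance 1 / (n - 3)."""
    return math.atanh(r), 1.0 / (n - 3)


# Illustrative values: a coefficient with t = 2.0 and 96 residual degrees of freedom.
r_partial = partial_correlation_from_t(2.0, 96)
z, var_z = fisher_z(0.30, 103)
print(round(r_partial, 3), round(z, 4), round(var_z, 4))
```

In line with the recommendation above, partial and bivariate correlations obtained this way would be analyzed separately rather than pooled in one sample.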

After the primary effect size measure for the meta-analysis has been defined, it might become necessary in the later coding process to convert study findings that are reported in an effect size measure other than the chosen one. For example, a study might report only descriptive statistics for two study groups but no correlation coefficient, which is used as the primary effect size measure in the meta-analysis. Different effect size measures can be harmonized using conversion formulae, which are provided by standard method books such as Borenstein et al. (2009) or Lipsey and Wilson (2001). There also exist online effect size calculators for meta-analysis.
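
For the example above, a possible conversion path runs from group descriptives to a standardized mean difference d and from d to a correlation r. The sketch below follows the conversion formulae as given in standard method books such as Borenstein et al. (2009); the function names and the descriptive statistics are hypothetical.

```python
import math


def cohens_d(mean1, mean2, sd1, sd2, n1, n2):
    """Standardized mean difference based on the pooled within-group standard deviation."""
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (mean1 - mean2) / pooled_sd


def d_to_r(d, n1, n2):
    """Convert d to r with the correction factor a = (n1 + n2)^2 / (n1 * n2)."""
    a = (n1 + n2) ** 2 / (n1 * n2)
    return d / math.sqrt(d ** 2 + a)


# Hypothetical descriptive statistics of two study groups.
d = cohens_d(mean1=5.0, mean2=4.0, sd1=2.0, sd2=2.0, n1=50, n2=50)
r = d_to_r(d, n1=50, n2=50)
print(round(d, 3), round(r, 3))
```

Coding which effect sizes were converted, and by which formula, allows the conversion itself to be tested later as a methodological moderator.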

Choosing which meta-analytical method to use is directly connected to the research question of the meta-analysis. Research questions in meta-analyses can address a relationship between constructs or an effect of an intervention in a general manner, or they can focus on moderating or mediating effects. There are four meta-analytical methods that are primarily used in contemporary management research (Combs et al. 2019; Geyer-Klingeberg et al. 2020), which allow the investigation of these different types of research questions: traditional univariate meta-analysis, meta-regression, meta-analytic structural equation modeling, and qualitative meta-analysis (Hoon 2013). While the first three are quantitative, the last summarizes qualitative findings. Table 1 summarizes the key characteristics of the three quantitative methods.

In its traditional form, a meta-analysis reports a weighted mean effect size for the relationship or intervention of investigation and provides information on the magnitude of variance among primary studies (Aguinis et al. 2011c; Borenstein et al. 2009). Accordingly, it serves as a quantitative synthesis of a research field (Borenstein et al. 2009; Geyskens et al. 2009). Prominent traditional approaches have been developed, for example, by Hedges and Olkin (1985) or Hunter and Schmidt (1990, 2004). However, beyond its simple summary function, the traditional approach has limitations in explaining the observed variance among findings (Gonzalez-Mulé and Aguinis 2018). To identify moderators (or boundary conditions) of the relationship of interest, meta-analysts can create subgroups and investigate differences between those groups (Borenstein and Higgins 2013; Hunter and Schmidt 2004). Potential moderators can be study characteristics (e.g., whether a study is published vs. unpublished), sample characteristics (e.g., study country, industry focus, or type of survey/experiment participants), or measurement artifacts (e.g., different types of variable measurements). The univariate approach is thus suitable to identify the overall direction of a relationship and can serve as a good starting point for additional analyses. However, due to its limitations in examining boundary conditions and developing theory, the univariate approach on its own is currently often viewed as insufficient (Rauch 2020; Shaw and Ertug 2017).
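
A weighted mean effect size and the variance among primary studies can be computed, for instance, in the "bare-bones" manner associated with Hunter and Schmidt, which weights correlations by sample size and corrects only for sampling error. The following sketch is a simplified illustration of that idea with hypothetical data; it omits the artifact corrections (e.g., for unreliability) that the full approach offers.

```python
def bare_bones(correlations, sample_sizes):
    """Sample-size-weighted mean r, observed variance, and sampling error variance."""
    total_n = sum(sample_sizes)
    k = len(correlations)
    mean_r = sum(n * r for r, n in zip(correlations, sample_sizes)) / total_n
    var_obs = sum(n * (r - mean_r) ** 2 for r, n in zip(correlations, sample_sizes)) / total_n
    mean_n = total_n / k
    var_error = (1 - mean_r ** 2) ** 2 / (mean_n - 1)  # expected sampling error variance
    var_residual = max(var_obs - var_error, 0.0)       # variance left to be explained
    return mean_r, var_obs, var_error, var_residual


# Hypothetical correlations and sample sizes from three primary studies.
mean_r, var_obs, var_error, var_residual = bare_bones([0.10, 0.30, 0.50], [100, 200, 100])
print(round(mean_r, 3), round(var_obs, 4), round(var_error, 4), round(var_residual, 4))
```

A residual variance clearly above zero suggests that sampling error alone does not account for the differences between studies, which motivates the subgroup and moderator analyses described above.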

Meta-regression analysis (Hedges and Olkin 1985; Lipsey and Wilson 2001; Stanley and Jarrell 1989) aims to investigate the heterogeneity among observed effect sizes by testing multiple potential moderators simultaneously. In meta-regression, the coded effect size is used as the dependent variable and is regressed on a list of moderator variables. These moderator variables can be categorical variables as described previously in the traditional univariate approach or (semi)continuous variables such as country scores that are merged with the meta-analytical data. Thus, meta-regression analysis overcomes the disadvantages of the traditional approach, which only allows us to investigate moderators singularly using dichotomized subgroups (Combs et al. 2019; Gonzalez-Mulé and Aguinis 2018). These possibilities allow a more fine-grained analysis of research questions that are related to moderating effects. However, Schmidt (2017) critically notes that the number of effect sizes in the meta-analytical sample must be sufficiently large to produce reliable results when investigating multiple moderators simultaneously in a meta-regression. For further reading, Tipton et al. (2019) outline the technical, conceptual, and practical developments of meta-regression over the last decades. Gonzalez-Mulé and Aguinis (2018) provide an overview of methodological choices and develop evidence-based best practices for future meta-analyses in management using meta-regression.
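
The core of a meta-regression can be sketched as a weighted least squares regression of the effect sizes on the moderators, with weights equal to the inverse of each effect size's variance. The example below is a minimal sketch, not a substitute for dedicated software: it treats the between-study variance `tau2` as given (zero by default), uses hypothetical data with one dummy and one continuous moderator, and reports model-based standard errors only.

```python
import math


def invert(matrix):
    """Invert a small square matrix by Gauss-Jordan elimination with pivoting."""
    n = len(matrix)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("moderator matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [x / scale for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def meta_regression(effects, variances, moderators, tau2=0.0):
    """Weighted least squares: beta = (X'WX)^-1 X'Wy with W = diag(1 / (v_i + tau2))."""
    X = [[1.0] + list(row) for row in moderators]  # prepend the intercept column
    w = [1.0 / (v + tau2) for v in variances]
    p = len(X[0])
    xtwx = [[sum(wi * xi[a] * xi[b] for wi, xi in zip(w, X)) for b in range(p)]
            for a in range(p)]
    xtwy = [sum(wi * xi[a] * yi for wi, xi, yi in zip(w, X, effects)) for a in range(p)]
    cov = invert(xtwx)
    beta = [sum(cov[a][b] * xtwy[b] for b in range(p)) for a in range(p)]
    se = [math.sqrt(cov[a][a]) for a in range(p)]
    return beta, se


# Hypothetical data: columns are a published dummy and a continuous country score.
moderators = [[0, 1.0], [1, 2.0], [0, 3.0], [1, 1.0], [1, 2.0]]
effects = [0.15, 0.40, 0.25, 0.35, 0.40]
variances = [0.010, 0.020, 0.015, 0.010, 0.025]
beta, se = meta_regression(effects, variances, moderators)
print([round(b, 3) for b in beta], [round(s, 3) for s in se])
```

With only five effect sizes and two moderators, the example also shows why Schmidt (2017) stresses sample size: the standard errors are large relative to the coefficients.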

Meta-analytic structural equation modeling (MASEM) combines meta-analysis and structural equation modeling and allows researchers to simultaneously investigate the relationships among several constructs in a path model. Researchers can use MASEM to test several competing theoretical models against each other or to identify mediation mechanisms in a chain of relationships (Bergh et al. 2016). This method is typically performed in two steps (Cheung and Chan 2005): In Step 1, a pooled correlation matrix is derived, which includes the meta-analytical mean effect sizes for all variable combinations; Step 2 then uses this matrix to fit the path model. While MASEM was based primarily on traditional univariate meta-analysis to derive the pooled correlation matrix in its early years (Viswesvaran and Ones 1995), more advanced methods, such as the generalized least squares (GLS) approach (Becker 1992, 1995) or the two-stage structural equation modeling (TSSEM) approach (Cheung and Chan 2005), have been subsequently developed. Cheung (2015a) and Jak (2015) provide an overview of these approaches in their books with exemplary code. For datasets with more complex data structures, Wilson et al. (2016) also developed a multilevel approach that is related to the TSSEM approach in the second step. Bergh et al. (2016) discuss nine decision points and develop best practices for MASEM studies.
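
The two-step logic can be illustrated with the early univariate variant, in which each correlation is pooled separately and the pooled matrix is then used to estimate a path model. The sketch below assumes a simple mediation model X → M → Y with a direct path from X to Y, uses hypothetical studies that do not all report every correlation, and yields point estimates only. It is not an implementation of the GLS or TSSEM approaches, which additionally account for the dependence among correlations and provide standard errors and fit statistics.

```python
def pool_correlations(studies):
    """Step 1: sample-size-weighted mean of each correlation across reporting studies."""
    sums, weights = {}, {}
    for study in studies:
        for pair, r in study["r"].items():
            sums[pair] = sums.get(pair, 0.0) + study["n"] * r
            weights[pair] = weights.get(pair, 0) + study["n"]
    return {pair: sums[pair] / weights[pair] for pair in sums}


def mediation_paths(pooled):
    """Step 2: standardized path coefficients of X -> M -> Y plus the direct path X -> Y."""
    a = pooled[("X", "M")]
    r_xy, r_my = pooled[("X", "Y")], pooled[("M", "Y")]
    denom = 1 - a ** 2
    b = (r_my - r_xy * a) / denom         # effect of M on Y, controlling for X
    c_direct = (r_xy - r_my * a) / denom  # effect of X on Y, controlling for M
    return {"a": a, "b": b, "c_direct": c_direct, "indirect": a * b}


# Hypothetical primary studies; not every study reports every correlation.
studies = [
    {"n": 100, "r": {("X", "M"): 0.4, ("X", "Y"): 0.2, ("M", "Y"): 0.4}},
    {"n": 100, "r": {("X", "M"): 0.6, ("M", "Y"): 0.4}},
    {"n": 100, "r": {("X", "Y"): 0.4}},
]
pooled = pool_correlations(studies)
paths = mediation_paths(pooled)
print({name: round(value, 3) for name, value in paths.items()})
```

Because each cell of the pooled matrix may rest on a different set of studies, the appropriate sample size for the second step is ambiguous in this variant, which is one reason why the more advanced approaches were developed.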

While the approaches explained above focus on quantitative outcomes of empirical studies, qualitative meta-analysis aims to synthesize qualitative findings from case studies (Hoon 2013; Rauch et al. 2014). The distinctive feature of qualitative case studies is their potential to provide in-depth information about specific contextual factors or to shed light on reasons for certain phenomena that cannot usually be investigated by quantitative studies (Rauch 2020; Rauch et al. 2014). In a qualitative meta-analysis, the identified case studies are systematically coded in a meta-synthesis protocol, which is then used to identify influential variables or patterns and to derive a meta-causal network (Hoon 2013). Thus, the insights of contextualized and typically nongeneralizable single studies are aggregated to a larger, more generalizable picture (Habersang et al. 2019). Although still the exception, this method can thus provide important contributions for academics in terms of theory development (Combs et al. 2019; Hoon 2013) and for practitioners in terms of evidence-based management or entrepreneurship (Rauch et al. 2014). Levitt (2018) provides a guide and discusses conceptual issues for conducting qualitative meta-analysis in psychology, which is also useful for management researchers.

Software solutions to perform meta-analyses range from built-in functions or additional packages of statistical software to software purely focused on meta-analyses and from commercial to open-source solutions. However, in addition to personal preferences, the choice of the most suitable software depends on the complexity of the methods used and the dataset itself (Cheung and Vijayakumar 2016). Meta-analysts therefore must carefully check if their preferred software is capable of performing the intended analysis.

Among commercial software providers, Stata (from version 16 on) offers built-in functions to perform various meta-analytical analyses or to produce various plots (Palmer and Sterne 2016). For SPSS and SAS, there exist several macros for meta-analyses provided by scholars, such as David B. Wilson or Andy P. Field and Raphael Gillett (Field and Gillett 2010). For researchers using the open-source software R (R Core Team 2021), Polanin et al. (2017) provide an overview of 63 meta-analysis packages and their functionalities. For new users, they recommend the package metafor (Viechtbauer 2010), which includes most necessary functions and for which the author Wolfgang Viechtbauer provides tutorials on his project website. In addition to packages and macros for statistical software, templates for Microsoft Excel have also been developed to conduct simple meta-analyses, such as Meta-Essentials by Suurmond et al. (2017). Finally, programs purely dedicated to meta-analysis also exist, such as Comprehensive Meta-Analysis (Borenstein et al. 2013) or RevMan by The Cochrane Collaboration (2020).

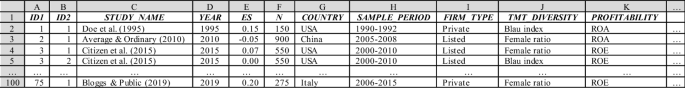

The first step in the coding process is the design of the coding sheet. A universal template does not exist because the design of the coding sheet depends on the methods used, the respective software, and the complexity of the research design. For univariate meta-analysis or meta-regression, data are typically coded in wide format. In its simplest form, when investigating a correlational relationship between two variables using the univariate approach, the coding sheet would contain a column for the study name or identifier, the effect size coded from the primary study, and the study sample size. However, such simple relationships are unlikely in management research because the included studies are typically not identical but differ in several respects. With more complex data structures or moderator variables being investigated, additional columns are added to the coding sheet to reflect the data characteristics. These variables can be coded as dummy, factor, or (semi)continuous variables and later used to perform a subgroup analysis or meta-regression. For MASEM, the required data input format can deviate depending on the method used (e.g., TSSEM requires a list of correlation matrices as data input). For qualitative meta-analysis, the coding scheme typically summarizes the key qualitative findings and important contextual and conceptual information (see Hoon (2013) for a coding scheme for qualitative meta-analysis). Figure 1 shows an exemplary coding scheme for a quantitative meta-analysis on the correlational relationship between top-management team diversity and profitability. In addition to effect and sample sizes, information about the study country, firm type, and variable operationalizations are coded. The list could be extended by further study and sample characteristics.
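
A coding sheet of the kind described above could be represented in code as one record per coded effect size. The sketch below is only an illustration: the column names, the studies, and all values are hypothetical and do not reproduce Figure 1.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class CodedEffect:
    """One row of the coding sheet: one effect size from one primary study."""
    study_id: str
    effect_size_r: float
    sample_size: int
    country: str
    firm_type: str
    diversity_measure: str
    profitability_measure: str


# Hypothetical entries; Study A contributes two effect sizes.
rows = [
    CodedEffect("Study A", 0.12, 250, "DE", "listed", "gender diversity", "ROA"),
    CodedEffect("Study A", 0.08, 250, "DE", "listed", "gender diversity", "ROE"),
    CodedEffect("Study B", -0.05, 410, "US", "private", "age diversity", "ROA"),
]


def to_csv(coded_rows):
    """Serialize the coding sheet to CSV text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(CodedEffect)])
    writer.writeheader()
    for row in coded_rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


sheet = to_csv(rows)
print(sheet)
```

Keeping a shared study identifier across rows preserves the information that several effect sizes stem from the same sample, which matters for the dependence issues discussed below.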

It is generally important to consider the intended research model and relevant nontarget variables before coding a meta-analytic dataset. For example, study characteristics can be important moderators or function as control variables in a meta-regression model. Similarly, control variables may be relevant in a MASEM approach to reduce confounding bias. Coding additional variables or constructs subsequently can be arduous if the sample of primary studies is large. However, the decision to include respective moderator or control variables, as in any empirical analysis, should always be based on strong (theoretical) rationales about how these variables can impact the investigated effect (Bernerth and Aguinis 2016; Bernerth et al. 2018; Thompson and Higgins 2002). While substantive moderators refer to theoretical constructs that act as buffers or enhancers of a supposed causal process, methodological moderators are features of the respective research designs that denote the methodological context of the observations and are important to control for systematic statistical particularities (Rudolph et al. 2020). Havranek et al. (2020) provide a list of recommended variables to code as potential moderators. While researchers may have clear expectations about the effects for some of these moderators, the concerns for other moderators may be tentative, and moderator analysis may be approached in a rather exploratory fashion. Thus, we argue that researchers should make full use of the meta-analytical design to obtain insights about potential context dependence that a primary study cannot achieve.

A long-debated issue in conducting meta-analyses is whether to use only one or all available effect sizes for the same construct within a single primary study. For meta-analyses in management research, this question is fundamental because many empirical studies, particularly those relying on company databases, use multiple variables for the same construct to perform sensitivity analyses, resulting in multiple relevant effect sizes. In this case, researchers can either (randomly) select a single value, calculate a study average, or use the complete set of effect sizes (Bijmolt and Pieters 2001; López-López et al. 2018). Multiple effect sizes from the same study enrich the meta-analytic dataset and allow us to investigate the heterogeneity of the relationship of interest, such as different variable operationalizations (López-López et al. 2018; Moeyaert et al. 2017). However, including more than one effect size from the same study violates the assumption that observations are independent (Cheung 2019; López-López et al. 2018), which can lead to biased results and erroneous conclusions (Gooty et al. 2021). We follow the recommendation of current best practice guides to take advantage of using all available effect size observations but to carefully consider interdependencies using appropriate methods such as multilevel models, panel regression models, or robust variance estimation (Cheung 2019; Geyer-Klingeberg et al. 2020; Gooty et al. 2021; López-López et al. 2018; Moeyaert et al. 2017).
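
Of the three options named above, the study average is the simplest to illustrate. The sketch below averages Fisher-z-transformed correlations within each study and transforms the mean back to a correlation; the data and function name are hypothetical. It is shown only to make the option concrete, since it discards the within-study variation that the recommended multilevel models or robust variance estimation retain.

```python
import math
from collections import defaultdict


def study_averages(effects):
    """Average Fisher-z-transformed correlations per study and back-transform to r."""
    grouped = defaultdict(list)
    for study_id, r in effects:
        grouped[study_id].append(math.atanh(r))
    return {sid: math.tanh(sum(zs) / len(zs)) for sid, zs in grouped.items()}


# Hypothetical input: study S1 reports two operationalizations of the same construct.
effects = [("S1", 0.20), ("S1", 0.40), ("S2", 0.30)]
averages = study_averages(effects)
print({sid: round(r, 3) for sid, r in averages.items()})
```

After averaging, each study contributes exactly one observation, so the independence assumption holds, at the cost of no longer being able to compare operationalizations within studies.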

Before conducting the primary analysis, some preliminary sensitivity analyses might be necessary to ensure the robustness of the meta-analytical findings (Rudolph et al. 2020). First, influential outlier observations could potentially bias the observed results, particularly if the number of total effect sizes is small. Several statistical methods can be used to identify outliers in meta-analytical datasets (Aguinis et al. 2013; Viechtbauer and Cheung 2010). However, there is a debate about whether to keep or omit these observations. In any case, the studies concerned should be closely inspected to find an explanation for their deviating results. As in any other primary study, outliers can be a valid representation, albeit representing a different population, measure, construct, design or procedure. Thus, inferences about outliers can provide the basis to infer potential moderators (Aguinis et al. 2013; Steel et al. 2021). On the other hand, outliers can indicate invalid research, for instance, when unrealistically strong correlations are due to construct overlap (i.e., lack of a clear demarcation between independent and dependent variables), invalid measures, or simply typing errors when coding effect sizes. An advisable step is therefore to compare the results both with and without outliers and to decide whether to exclude outlier observations only after careful consideration (Geyskens et al. 2009; Grewal et al. 2018; Kepes et al. 2013). However, instead of simply focusing on the size of the outlier, its leverage should also be considered. Thus, Viechtbauer and Cheung (2010) propose considering a combination of standardized deviation and a study’s leverage.
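
Comparing results with and without individual observations can be sketched as a leave-one-out analysis. The example below uses an inverse-variance-weighted mean and hypothetical data; it is a simpler check than the studentized residuals and leverage diagnostics proposed by Viechtbauer and Cheung (2010), but it reflects the same idea that an observation matters through its influence on the pooled result.

```python
def pooled_mean(effects, variances):
    """Inverse-variance-weighted mean effect size."""
    weights = [1.0 / v for v in variances]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)


def leave_one_out(effects, variances):
    """Re-estimate the pooled mean with each observation removed in turn."""
    full = pooled_mean(effects, variances)
    results = []
    for i in range(len(effects)):
        rest_effects = effects[:i] + effects[i + 1:]
        rest_variances = variances[:i] + variances[i + 1:]
        estimate = pooled_mean(rest_effects, rest_variances)
        results.append((i, estimate, estimate - full))  # (index, estimate, shift)
    return full, results


# Hypothetical effect sizes; the last one is unrealistically large.
effects = [0.10, 0.12, 0.08, 0.90]
variances = [0.01, 0.01, 0.01, 0.01]
full, results = leave_one_out(effects, variances)
most_influential = max(results, key=lambda item: abs(item[2]))[0]
print(round(full, 3), most_influential)
```

A large shift flags a study for closer inspection; whether the study is then excluded remains a substantive decision, as argued above.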

Second, as mentioned in the context of a literature search, potential publication bias may be an issue. Publication bias can be examined in multiple ways (Rothstein et al. 2005). First, the funnel plot is a simple graphical tool that can provide an overview of the effect size distribution and help to detect publication bias (Stanley and Doucouliagos 2010). A funnel plot can also help to identify potential outliers. As mentioned above, a graphical display of deviation (e.g., studentized residuals) and leverage (Cook’s distance) can help detect the presence of outliers and evaluate their influence (Viechtbauer and Cheung 2010). Moreover, several statistical procedures can be used to test for publication bias (Harrison et al. 2017; Kepes et al. 2012), including subgroup comparisons between published and unpublished studies, Begg and Mazumdar’s (1994) rank correlation test, cumulative meta-analysis (Borenstein et al. 2009), the trim and fill method (Duval and Tweedie 2000a, b), Egger et al.’s (1997) regression test, failsafe N (Rosenthal 1979), or selection models (Hedges and Vevea 2005; Vevea and Woods 2005). In examining potential publication bias, Kepes et al. (2012) and Harrison et al. (2017) both recommend not relying only on a single test but rather using multiple conceptually different test procedures (i.e., the so-called “triangulation approach”).
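
Two of the listed procedures are simple enough to sketch directly. The code below shows a basic form of the Egger-type regression test, in which the standardized effect is regressed on precision and an intercept far from zero points to funnel plot asymmetry, and Rosenthal's failsafe N. The data are hypothetical, the functions return test statistics rather than p-values, and established software should be preferred for actual analyses.

```python
import math


def egger_test(effects, std_errors):
    """Regress effect / SE on 1 / SE by OLS and test the intercept (df = k - 2)."""
    x = [1.0 / se for se in std_errors]
    y = [e / se for e, se in zip(effects, std_errors)]
    k = len(x)
    mean_x, mean_y = sum(x) / k, sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_intercept = math.sqrt(sse / (k - 2) * (1.0 / k + mean_x ** 2 / sxx))
    return intercept, intercept / se_intercept, k - 2


def failsafe_n(z_scores, z_alpha=1.645):
    """Number of unpublished null results needed to render the combined result nonsignificant."""
    k = len(z_scores)
    return sum(z_scores) ** 2 / z_alpha ** 2 - k


# Hypothetical data in which less precise studies report larger effects.
effects = [0.10, 0.15, 0.25, 0.40, 0.55]
std_errors = [0.05, 0.08, 0.12, 0.20, 0.30]
intercept, t_stat, df = egger_test(effects, std_errors)
z_scores = [e / se for e, se in zip(effects, std_errors)]
print(round(intercept, 2), df, round(failsafe_n(z_scores), 1))
```

Because the two procedures rest on different logics, reporting both is in the spirit of the triangulation approach, although neither replaces a careful inspection of the funnel plot.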

After controlling and correcting for the potential presence of impactful outliers or publication bias, the next step in meta-analysis is the primary analysis, where meta-analysts must decide between two different types of models that are based on different assumptions: fixed-effects and random-effects (Borenstein et al. 2010). Fixed-effects models assume that all observations share a common mean effect size, which means that differences are only due to sampling error, while random-effects models assume heterogeneity and allow for a variation of the true effect sizes across studies (Borenstein et al. 2010; Cheung and Vijayakumar 2016; Hunter and Schmidt 2004). Both models are explained in detail in standard textbooks (e.g., Borenstein et al. 2009; Hunter and Schmidt 2004; Lipsey and Wilson 2001).

In general, the presence of heterogeneity is likely in management meta-analyses because most studies do not have identical empirical settings, which can yield different effect size strengths or directions for the same investigated phenomenon. For example, the identified studies have been conducted in different countries with different institutional settings, or the type of study participants varies (e.g., students vs. employees, blue-collar vs. white-collar workers, or manufacturing vs. service firms). Thus, the vast majority of meta-analyses in management research and related fields use random-effects models (Aguinis et al. 2011a). In a meta-regression, the random-effects model turns into a so-called mixed-effects model because moderator variables are added as fixed effects to explain the impact of observed study characteristics on effect size variations (Raudenbush 2009).
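
The difference between the two model types can be made concrete with a short sketch. The fixed-effects estimate weights each effect size by the inverse of its sampling variance, whereas the random-effects estimate adds an estimate of the between-study variance to each weight. The example assumes the DerSimonian and Laird moment estimator for that variance, which is one common choice among several and is not prescribed by the sources cited here; the data are hypothetical.

```python
import math


def fixed_effect(effects, variances):
    """Inverse-variance-weighted mean and its standard error."""
    weights = [1.0 / v for v in variances]
    mean = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return mean, math.sqrt(1.0 / sum(weights))


def random_effects_dl(effects, variances):
    """Random-effects mean with a DerSimonian-Laird estimate of tau^2, plus Q and I^2."""
    weights = [1.0 / v for v in variances]
    fe_mean, _ = fixed_effect(effects, variances)
    q = sum(w * (y - fe_mean) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    tau2 = max(0.0, (q - df) / c)                         # between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0   # share of variance beyond sampling error
    re_weights = [1.0 / (v + tau2) for v in variances]
    mean = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    se = math.sqrt(1.0 / sum(re_weights))
    return {"mean": mean, "se": se, "tau2": tau2, "q": q, "i2": i2}


# Hypothetical effect sizes with equal sampling variances.
effects = [0.10, 0.30, 0.50]
variances = [0.01, 0.01, 0.01]
fe_mean, fe_se = fixed_effect(effects, variances)
re = random_effects_dl(effects, variances)
print(round(fe_se, 4), round(re["se"], 4), round(re["tau2"], 3), round(re["i2"], 1))
```

In this example both models yield the same mean, but the random-effects standard error is about twice as large, which illustrates how ignoring heterogeneity can overstate the precision of the pooled estimate.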

The final step in performing a meta-analysis is reporting its results. Most importantly, all steps and methodological decisions should be comprehensible to the reader. DeSimone et al. (2020) provide an extensive checklist for journal reviewers of meta-analytical studies. This checklist can also be used by authors when performing their analyses and reporting their results to ensure that all important aspects have been addressed. Alternative checklists are provided, for example, by Appelbaum et al. (2018) or Page et al. (2021). Similarly, Levitt et al. (2018) provide a detailed guide for qualitative meta-analysis reporting standards.

For quantitative meta-analyses, tables reporting results should include all important information and test statistics, including mean effect sizes; standard errors and confidence intervals; the number of observations and study samples included; and heterogeneity measures. If the meta-analytic sample is rather small, a forest plot provides a good overview of the different findings and their accuracy. However, this figure will be less feasible for meta-analyses with several hundred effect sizes included. Also, results displayed in the tables and figures must be explained verbally in the results and discussion sections. Most importantly, authors must answer the primary research question, i.e., whether there is a positive, negative, or no relationship between the variables of interest, or whether the examined intervention has a certain effect. These results should be interpreted with regard to their magnitude (or significance), both economically and statistically. However, when discussing meta-analytical results, authors must describe the complexity of the results, including the identified heterogeneity and important moderators, future research directions, and theoretical relevance (DeSimone et al. 2019). In particular, the discussion of identified heterogeneity and underlying moderator effects is critical; not including this information can lead to false conclusions among readers, who interpret the reported mean effect size as universal for all included primary studies and ignore the variability of findings when citing the meta-analytic results in their research (Aytug et al. 2012; DeSimone et al. 2019).

Another increasingly important topic is the public provision of meta-analytical datasets and statistical codes via open-source repositories. Open-science practices allow for results validation and for the use of coded data in subsequent meta-analyses (Polanin et al. 2020), contributing to the development of cumulative science. Steel et al. (2021) refer to open science meta-analyses as a step towards “living systematic reviews” (Elliott et al. 2017) with continuous updates in real time. MRQ supports this development and encourages authors to make their datasets publicly available. Moreau and Gamble (2020), for example, provide various templates and video tutorials to conduct open science meta-analyses. There exist several open science repositories, such as the Open Science Framework (OSF; for a tutorial, see Soderberg 2018), to preregister and make documents publicly available. Furthermore, several initiatives in the social sciences have been established to develop dynamic meta-analyses, such as metaBUS (Bosco et al. 2015, 2017), MetaLab (Bergmann et al. 2018), or PsychOpen CAMA (Burgard et al. 2021).

This editorial provides a comprehensive overview of the essential steps in conducting and reporting a meta-analysis with references to more in-depth methodological articles. It also serves as a guide for meta-analyses submitted to MRQ and other management journals. MRQ welcomes all types of meta-analyses from all subfields and disciplines of management research.

Footnote 1: Gusenbauer and Haddaway (2020), however, point out that Google Scholar is not appropriate as a primary search engine due to a lack of reproducibility of search results.